A new tool has come to the open market: ChatGPT. ChatGPT is built on OpenAI’s third-generation Generative Pre-trained Transformer (GPT-3) family of models; specifically, it is fine-tuned from a model in the GPT-3.5 series. GPT-3 itself is a transformer language model trained in an unsupervised fashion. In plain terms, it’s a word generator. Word generators have been around since early computing, so why is this one different? Why do we care as security-minded individuals?

If we take ChatGPT at face value, it’s a chatbot. It takes input from the user and produces output in natural language that the user can then read and interpret. What makes ChatGPT (and the GPT-3 model itself) important is its sophistication and usability. With little to no knowledge of a given topic, a user can prompt ChatGPT to quickly write volumes of text on that topic. YouTube is full of examples of people using it to write essays, books, dad jokes, and more.

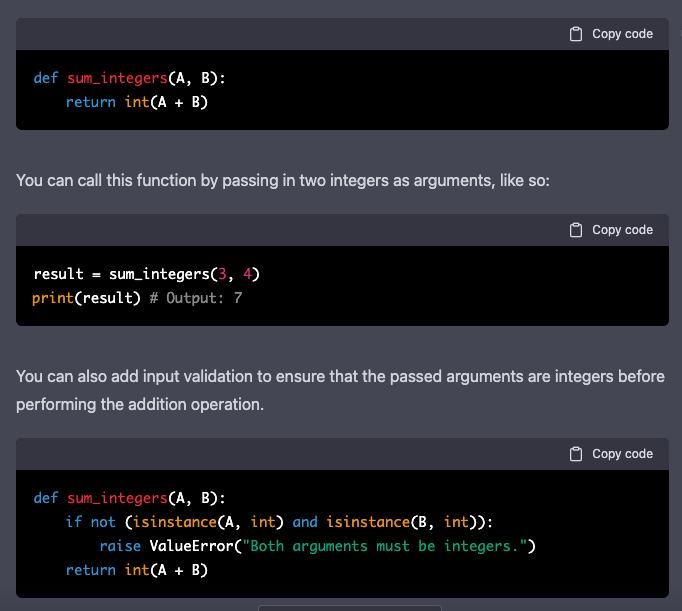

For the purposes of this post, the key point is that ChatGPT can produce code. While most “common” uses of ChatGPT require no prior knowledge of a given domain, getting usable code out of it requires a bit of domain expertise. Given a well-constructed input such as “write a function in python that takes integer parameters A and B and returns their sum as an integer”, it produces the following output:

That is an impressive tutorial, covering not only how to write the function but also how to use it and how to improve on the simple base case.
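For comparison, a hand-written function meeting the same prompt might look like the sketch below. It is my own illustration, not ChatGPT’s verbatim response, and the explicit type check is just one assumed example of what “improving on the simple base case” could mean.

```python
def add(a: int, b: int) -> int:
    """Return the sum of integers a and b."""
    # One possible "improvement" on the base case: reject non-integers
    # explicitly instead of silently concatenating strings or adding floats.
    # bool is a subclass of int in Python, so it is excluded as well.
    for name, value in (("a", a), ("b", b)):
        if not isinstance(value, int) or isinstance(value, bool):
            raise TypeError(f"{name} must be an int, got {type(value).__name__}")
    return a + b


if __name__ == "__main__":
    print(add(2, 3))
```

Even a function this small involves a design decision (fail loudly on bad input versus trust the caller) that the person pasting the code should understand.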

There is a tremendous amount of power in a tool such as ChatGPT. And as we know, with great power comes great responsibility! As a cybersecurity risk management professional, I find that this type of code generation sets off my spidey-sense.

For years, many programmers (myself included) have turned to online tools and repositories such as Stack Overflow to find answers to common problems. The example code is then often copied and pasted into production environments. On one hand, this creates a kind of “bottom up” code standardization that isn’t necessarily a bad thing. On the other hand, it can spread the same vulnerability across many code bases.
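To make the copy-paste risk concrete, here is a hypothetical example of the kind of snippet that spreads easily: a database lookup that builds SQL with string formatting (SQL injection, part of the OWASP Top 10 “Injection” category), next to the parameterized version. The table, data, and function names are made up for illustration; only the standard-library `sqlite3` module is used.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, username: str) -> list:
    # Vulnerable pattern: user input is pasted directly into the SQL string,
    # so a value like "' OR '1'='1" changes the meaning of the query.
    query = f"SELECT name FROM users WHERE name = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str) -> list:
    # Safer pattern: the "?" placeholder makes sqlite3 bind the value as data,
    # never as SQL text.
    return conn.execute("SELECT name FROM users WHERE name = ?", (username,)).fetchall()


def demo() -> tuple:
    # Hypothetical in-memory table with two users.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT)")
    conn.executemany("INSERT INTO users VALUES (?)", [("alice",), ("bob",)])
    payload = "' OR '1'='1"
    return find_user_unsafe(conn, payload), find_user_safe(conn, payload)


if __name__ == "__main__":
    unsafe_rows, safe_rows = demo()
    print("unsafe:", unsafe_rows)  # every row in the table
    print("safe:", safe_rows)      # no rows
```

Both functions “work” for normal input, which is exactly why the unsafe version survives being copied from one project to the next.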

With the current tech crunch, there are fewer developers to build the same number of features. That pressure will push programmers to look for ways to use their time efficiently. In the right hands, ChatGPT is a powerful tool; it is even possible to write entire applications using only ChatGPT. But code generated this way can introduce vulnerabilities that the programmer doesn’t necessarily understand, because they didn’t write it (think Stack Overflow on steroids).

As we continue to push developers to produce more code, we must support them with rigorous processes. When writing code, the OWASP Top 10 and the National Institute of Standards and Technology Secure Software Development Framework (NIST SSDF) are great resources. When evaluating code, vulnerability scanners and static analyzers are great tools. A streamlined deployment process (DevOps, Continuous Integration, Continuous Deployment) is key to pushing out fixed code quickly when a vulnerability is found. All of these can be wrapped in a risk management process that prioritizes actions for your organization. While this may seem like a lot, processes that are developed and implemented ahead of a crisis can make development cycles more efficient.
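Real static analyzers (Bandit is one for Python) do this far more thoroughly, but the core idea is simple: parse the code without running it and flag risky patterns before it ships. Here is a toy sketch using Python’s standard `ast` module; the rule list is hypothetical and deliberately tiny, and it is no substitute for a maintained tool.

```python
import ast

# Hypothetical, deliberately tiny rule set: calls a reviewer would want flagged.
RISKY_CALLS = {"eval", "exec", "os.system", "pickle.loads"}


def call_name(node: ast.Call) -> str:
    """Return a dotted name for simple calls like eval(...) or os.system(...)."""
    func = node.func
    if isinstance(func, ast.Name):
        return func.id
    if isinstance(func, ast.Attribute) and isinstance(func.value, ast.Name):
        return f"{func.value.id}.{func.attr}"
    return ""  # more complex call targets are ignored by this toy checker


def scan(source: str) -> list:
    """Return sorted (line number, finding) pairs for risky calls in source."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        name = call_name(node)
        if name in RISKY_CALLS:
            findings.append((node.lineno, name))
        # Also flag subprocess calls that pass a literal shell=True.
        if name.startswith("subprocess.") and any(
            kw.arg == "shell"
            and isinstance(kw.value, ast.Constant)
            and kw.value.value is True
            for kw in node.keywords
        ):
            findings.append((node.lineno, name + "(shell=True)"))
    return sorted(findings)


if __name__ == "__main__":
    # Stand-in for a snippet pasted in from a chatbot or forum answer.
    generated = (
        "import os, subprocess\n"
        "user = input()\n"
        "os.system('ping ' + user)\n"
        "subprocess.run(user, shell=True)\n"
        "print(eval(user))\n"
    )
    for lineno, finding in scan(generated):
        print(f"line {lineno}: {finding}")
```

Running a check like this (or, better, a real scanner) in the CI pipeline means generated code gets the same scrutiny as hand-written code, regardless of where it came from.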

Using ChatGPT to supplement human-written code can be a quick way to get structure and boilerplate into your development environment. Using it as the main method of code generation and evaluation is rife with risk. Tread lightly, friends.